Containing the excitement

Why dynamic containerization and virtualization are increasingly the systems of choice for FIs

Today, monolithic applications among financial firms continue to be superseded by “dynamic” containerization and virtualization-based systems.

Containerization is a software deployment technology that allows developers to package an application together with all the files, configurations, libraries and binaries it needs, and to run it in an isolated environment.

Virtualization is the process of partitioning a physical server into multiple virtual servers. After partitioning, each virtual server behaves just as a physical server would. Essentially, it means running more workloads on each server so that the same hardware is used more efficiently, freeing resources for other tasks.

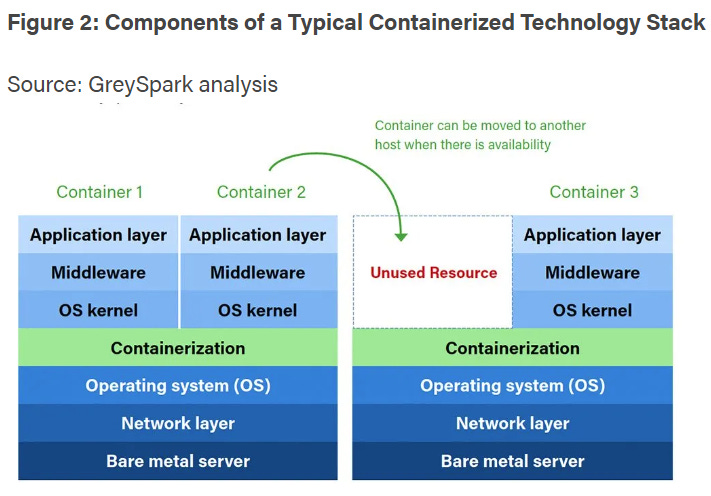

GreySpark depicts the containerization and virtualization processes below.

Source: GreySpark analysis

Both techniques enable the abstraction of one level of the technology stack from the one beneath it – a buffer, you could say – allowing software running above the abstraction layer to be moved around, typically from server to server as memory, storage or compute power become available.

Essentially, though, containerization is about abstracting an operating system and running an app. Virtualization is about abstracting hardware and running an operating system.

Cloud services based on containerization and virtualization are now well established in financial firms. Because both approaches are dynamic, they allow greater flexibility, efficiency and resource utilisation across IT systems. For instance, containers can be spun up and taken down within seconds if needed, so capacity can be scaled quickly to match demand.

A key difference between a container and a virtual machine is that when a virtual machine is moved it needs to take a full copy of its operating system with it, whereas a container shares the host's operating system kernel and only needs to take the application and a stripped-down set of supporting libraries with it. In general, this means that far more memory, storage and compute power are needed to run virtual machines than containers.

Nevertheless, firms need to be mindful of the increasing complexities involved with implementing and monitoring containerization and virtualization models.

In a container environment, multiple containers can run on the same host, which can make it difficult to isolate issues caused by a specific container. An application's containers can also be spread across multiple hosts, making it difficult to monitor them all from a central location. For example, some basic container functionality may run in the cloud, while the authentication and payments functionality may be built into containers that run on on-premises servers. Legacy systems may also make it difficult to support dynamic architectures, and regulation may place constraints on usage.

Given the intricacies of containerized systems, it is essential that monitoring processes and architectures are up to scratch. Otherwise, troubleshooting becomes increasingly difficult and small issues can quickly become critical.

Kubernetes is a key container orchestration (or management) system that provides functionality such as automated software deployment, scaling and resource management. The platform is vital to the operation of containers because it creates, orchestrates and destroys them, and it helps ensure that a certain level of service is maintained. If a container fails, Kubernetes will automatically spin up another one. Those failures can be captured and converted into events and metrics that alert the system administrator to the failure of a container so that she can investigate and diagnose the issue.
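To make the idea of turning container failures into events and metrics more concrete, here is a minimal sketch in Python. It does not call Kubernetes at all: the `ContainerStatus` record and the `failure_events` and `failure_metrics` helpers are hypothetical names invented for illustration, loosely modelled on the restart count and last exit reason that Kubernetes reports for each container. It simply compares two snapshots and reports which containers were restarted in between.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ContainerStatus:
    """Hypothetical, simplified snapshot of one container's state."""
    name: str
    restart_count: int
    last_exit_reason: Optional[str] = None  # e.g. "OOMKilled" or "Error"


def failure_events(previous, current):
    """Emit one event for each container restarted between two snapshots."""
    seen = {c.name: c.restart_count for c in previous}
    events = []
    for c in current:
        # A container missing from the earlier snapshot counts from zero.
        restarts = c.restart_count - seen.get(c.name, 0)
        if restarts > 0:
            events.append({
                "container": c.name,
                "restarts": restarts,
                "reason": c.last_exit_reason or "Unknown",
            })
    return events


def failure_metrics(events):
    """Roll the events up into a total number of restarts per exit reason."""
    totals = Counter()
    for event in events:
        totals[event["reason"]] += event["restarts"]
    return dict(totals)


before = [ContainerStatus("payments", 0), ContainerStatus("auth", 2, "Error")]
after = [ContainerStatus("payments", 1, "OOMKilled"), ContainerStatus("auth", 2, "Error")]

events = failure_events(before, after)
metrics = failure_metrics(events)
```

In this example only the `payments` container produces an event, because the `auth` restart count did not change between the two snapshots. A real monitoring tool would take these snapshots from the orchestrator rather than from hand-written records.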

However, it is typically in the best interests of firms to ensure that the Kubernetes platform itself is also monitored, which provides an extra layer of surveillance. Only with comprehensive monitoring across the entire application technology stack, from the big picture (resource utilization) to the smallest detail (health of the containers), will system administrators have the visibility they need to troubleshoot issues and keep the enterprise running smoothly.
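As a rough illustration of combining the big picture with the smallest detail, the following Python sketch rolls per-container samples up into a per-host summary. Everything in it is an assumption made for the example: the `ContainerSample` record, the `host_summary` function, the 80% memory threshold and the host names are hypothetical, and the figures are invented rather than measured.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ContainerSample:
    """Hypothetical reading for one container at one point in time."""
    host: str
    name: str
    memory_mib: int
    healthy: bool


def host_summary(samples, capacity_mib, threshold=0.8):
    """Aggregate container samples into per-host utilisation and health.

    capacity_mib maps each host name to its total memory in MiB and must
    contain an entry for every host that appears in the samples.
    """
    summary = {}
    for s in samples:
        entry = summary.setdefault(s.host, {"used_mib": 0, "unhealthy": []})
        entry["used_mib"] += s.memory_mib
        if not s.healthy:
            entry["unhealthy"].append(s.name)
    for host, entry in summary.items():
        utilisation = entry["used_mib"] / capacity_mib[host]
        entry["utilisation"] = utilisation
        # Alert on either view: a busy host or any unhealthy container.
        entry["alert"] = utilisation > threshold or bool(entry["unhealthy"])
    return summary


samples = [
    ContainerSample("host-a", "auth", 512, True),
    ContainerSample("host-a", "payments", 1536, False),
    ContainerSample("host-b", "web", 256, True),
]
report = host_summary(samples, {"host-a": 4096, "host-b": 4096})
```

Here `host-a` is flagged even though it uses only half of its memory, because one of its containers is unhealthy. That is the kind of issue a utilisation-only view would miss.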

As highlighted in our recent insights post, ITRS Geneos supports the most complex and interconnected IT estates, providing real-time monitoring for transactions, processes, applications and infrastructure across on-premises, cloud and highly dynamic containerized environments.